AI Video Startup Genmo Launches Mochi 1, an Open Source Rival to Runway, Kling, and Others

Genmo, an AI company focused on video generation, has announced a research preview of Mochi 1, a new open-source model for generating high-quality videos from text prompts. The company claims performance comparable to, or exceeding, that of leading proprietary rivals such as Runway's Gen-3 Alpha, Luma AI's Dream Machine, Kuaishou's Kling, Minimax's Hailuo, and many others.

Available under the permissive Apache 2.0 license, Mochi 1 offers users free access to cutting-edge video generation capabilities. By contrast, pricing for other models starts at limited free tiers and goes as high as $94.99 per month (for the Hailuo Unlimited tier). Users can download the full weights and model code for free on Hugging Face, though the model requires “at least 4” Nvidia H100 GPUs to run on a user's own machine.

In addition to the model release, Genmo is also making available a hosted playground, allowing users to experiment with Mochi 1's features firsthand.

The 480p model is available for use today, and a higher-definition version, Mochi 1 HD, is expected to launch later this year.

Advancing the state-of-the-art

Mochi 1 brings several significant advancements to the field of video generation, including high-fidelity motion and strong prompt adherence.

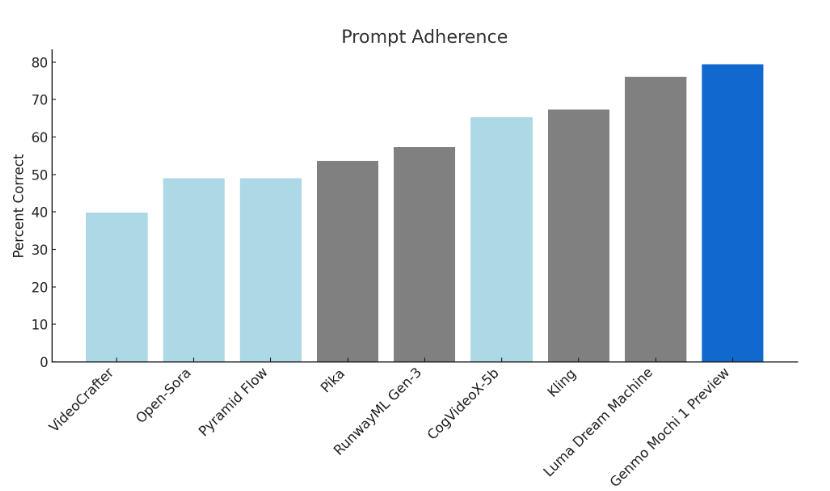

According to Genmo, Mochi 1 excels at following detailed user instructions, allowing for precise control over characters, settings, and actions in generated videos.

Genmo has positioned Mochi 1 as a solution that narrows the gap between open and closed video generation models.

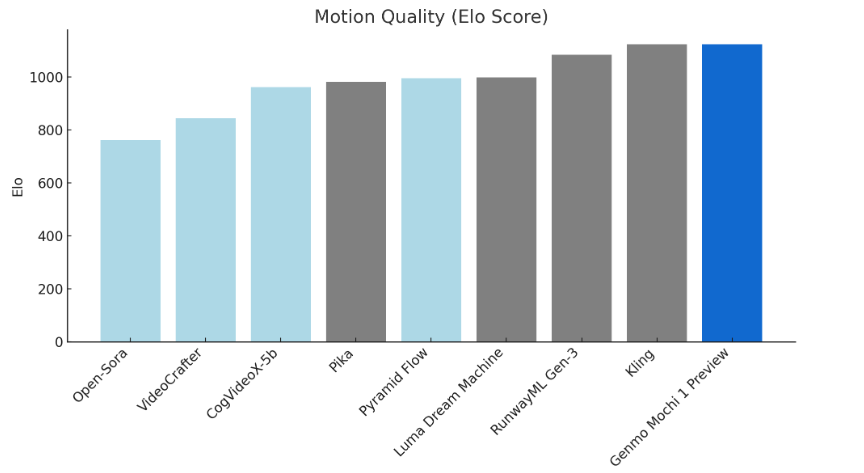

"We're 1% of the way to the generative video future. The real challenge is to create long, high-quality, fluid video. We're focusing heavily on improving motion quality," said Paras Jain, CEO and co-founder of Genmo, in an interview with VentureBeat.

Jain and his co-founder started Genmo with a mission to make AI technology accessible to everyone. "When it came to video, the next frontier for generative AI, we just thought it was so important to get this into the hands of real people," Jain emphasized. He added, "We fundamentally believe it's really important to democratize this technology and put it in the hands of as many people as possible. That's one reason we're open sourcing it."

Already, Genmo claims that in internal tests, Mochi 1 bests most other video AI models, including proprietary competitors Runway and Luma, at prompt adherence and motion quality.

Series A funding to the tune of $28.4M

In tandem with the Mochi 1 preview, Genmo also announced it has raised a $28.4 million Series A funding round, led by NEA, with additional participation from The House Fund, Gold House Ventures, WndrCo, Eastlink Capital Partners, and Essence VC. Several angel investors, including Abhay Parasnis (CEO of Typeface) and Amjad Masad (CEO of Replit), are also backing the company's vision for advanced video generation.

Jain's perspective on the role of video in AI goes beyond entertainment or content creation. "Video is the ultimate form of communication—30 to 50% of our brain's cortex is devoted to visual signal processing. It's how humans operate," he said.

Genmo's long-term vision extends to building tools that can power the future of robotics and autonomous systems. "The long-term vision is that if we nail video generation, we'll build the world's best simulators, which could help solve embodied AI, robotics, and self-driving," Jain explained.

Limitations and roadmap

As a preview, Mochi 1 still has some limitations. The current version supports only 480p resolution, and minor visual distortions can occur in edge cases involving complex motion. Additionally, while the model excels in photorealistic styles, it struggles with animated content.

However, Genmo plans to release Mochi 1 HD later this year, which will support 720p resolution and offer even greater motion fidelity.

"The only uninteresting video is one that doesn't move. Motion is the heart of video. That's why we've invested heavily in motion quality compared to other models," said Jain.

Looking ahead, Genmo is developing image-to-video synthesis capabilities and plans to improve model controllability, giving users even more precise control over video outputs.

Expanding use cases via open source video AI

Mochi 1's release opens up possibilities for various industries. Researchers can push the boundaries of video generation technologies, while developers and product teams may find new applications in entertainment, advertising, and education.

Mochi 1 can also be used to generate synthetic data for training AI models in robotics and autonomous systems.

Reflecting on the potential impact of democratizing this technology, Jain said, "In five years, I see a world where a poor kid in Mumbai can pull out their phone, have a great idea, and win an Academy Award—that's the kind of democratization we're aiming for."

Genmo invites users to try the preview version of Mochi 1 via its hosted playground at genmo.ai/play, where the model can be tested with personalized prompts, though at the time of this article's posting, the URL was not loading the correct page for VentureBeat.