Meta Open-sources the Llama3.3-70B-Instruct model

The Llama series has long been a benchmark for open-source large language models, and the Llama3 family has been updated steadily since it was first open-sourced. The first Llama3 models were released in April 2024, and a new version has appeared roughly every two to three months since then. Just yesterday, Meta open-sourced Llama3.3-70B, currently the only open model in the Llama3.3 series. Although it has only 70 billion parameters, it outperforms the 405-billion-parameter Llama3.1-405B on several evaluation benchmarks. Llama3.1-405B is the largest model in the Llama3 series and one of the largest open-source models in the industry.

Introduction to Llama3.3-70B-Instruct

Llama3.3-70B-Instruct is currently the only open-source model in the Llama3.3 series. Meta did not release a base model; only the instruction-tuned version is available.

According to Meta, Llama3.3-70B-Instruct is a pretrained and instruction-tuned model with 70 billion parameters. It is a text-only language model: it accepts text input and produces text output, with no multimodal support. It is, however, multilingual, officially supporting eight languages: English, German, French, Italian, Portuguese, Hindi, Spanish, and Thai. Chinese is not among them.

Llama3.3-70B-Instruct was trained on 15 trillion tokens, supports a 128K-token context window, and has a knowledge cutoff of December 2023.

Most of the performance gain comes from progress in alignment training and reinforcement learning. Meta said only that it relied on synthetic data and online preference optimization, in which the model is updated during training using preference feedback on the responses it generates along the way.
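Meta has not said which preference-optimization objective it used. As one illustration, the sketch below computes the loss of Direct Preference Optimization (DPO), a widely used preference objective, for a single pair of responses. Treating DPO as Meta's method is an assumption, and the log-probability values are made up. In an online variant, the pair would be sampled from the current model during training and ranked by a reward model or judge before the loss is applied.

```python
import math


def softplus(x: float) -> float:
    """Numerically stable log(1 + exp(x))."""
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))


def dpo_loss(policy_chosen_logp: float, policy_rejected_logp: float,
             ref_chosen_logp: float, ref_rejected_logp: float,
             beta: float = 0.1) -> float:
    """DPO loss for one (chosen, rejected) response pair.

    Each argument is the summed log-probability of a full response under
    either the policy being trained or a frozen reference model.
    beta=0.1 is an illustrative value, not a setting Meta has published.
    """
    # How much more the policy favours each response than the reference does
    chosen_shift = policy_chosen_logp - ref_chosen_logp
    rejected_shift = policy_rejected_logp - ref_rejected_logp
    z = beta * (chosen_shift - rejected_shift)
    # -log(sigmoid(z)) is the same as softplus(-z)
    return softplus(-z)


# Hypothetical log-probs: the policy already prefers the chosen answer slightly
# more than the reference does, so the loss is below log(2).
print(round(dpo_loss(-12.0, -15.0, -13.0, -14.0), 4))
```

Minimizing this loss pushes the policy to raise the likelihood of the preferred response relative to the reference model, while the reference term keeps it from drifting too far.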

Llama3.3-70B-Instruct also uses Grouped-Query Attention (GQA), in which several query heads share a single key/value head. This mainly shrinks the key-value cache and the memory traffic it causes during inference, which matters a great deal for a 70-billion-parameter model and lets it generate text faster.
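To see why GQA speeds up generation, one can compare key-value cache sizes with and without head sharing. The sketch below assumes a Llama-3-70B-style shape (80 layers, 64 query heads, 8 key/value heads, head dimension 128, bf16 values); these numbers come from the publicly posted model configuration rather than from this article, so treat them as assumptions. It also shows how query heads map onto shared K/V heads.

```python
def kv_cache_bytes(seq_len: int, n_layers: int, n_kv_heads: int,
                   head_dim: int, bytes_per_value: int = 2) -> int:
    """Memory for cached keys and values of one sequence.

    The leading factor 2 covers K and V; bytes_per_value=2 assumes bf16/fp16.
    """
    return 2 * n_layers * n_kv_heads * head_dim * bytes_per_value * seq_len


def kv_head_for(query_head: int, n_q_heads: int, n_kv_heads: int) -> int:
    """In GQA, each consecutive group of query heads shares one K/V head."""
    group_size = n_q_heads // n_kv_heads
    return query_head // group_size


# Assumed Llama-3-70B-style shape (not stated in the article)
LAYERS, Q_HEADS, HEAD_DIM = 80, 64, 128
CONTEXT = 128 * 1024  # full 128K-token window

# GQA: 8 shared K/V heads; standard multi-head attention: one K/V head per query head
gqa = kv_cache_bytes(CONTEXT, LAYERS, n_kv_heads=8, head_dim=HEAD_DIM)
mha = kv_cache_bytes(CONTEXT, LAYERS, n_kv_heads=Q_HEADS, head_dim=HEAD_DIM)

print(f"GQA (8 K/V heads):  {gqa / 2**30:.0f} GiB")
print(f"MHA (64 K/V heads): {mha / 2**30:.0f} GiB")
print("query heads 0-7 share K/V head", {kv_head_for(q, Q_HEADS, 8) for q in range(8)})
```

Under these assumptions the cache for a full 128K context drops from about 320 GiB to about 40 GiB, an 8x reduction. Because decoding has to read this cache for every new token, a smaller cache translates directly into higher generation speed.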

Evaluation results of Llama3.3-70B-Instruct

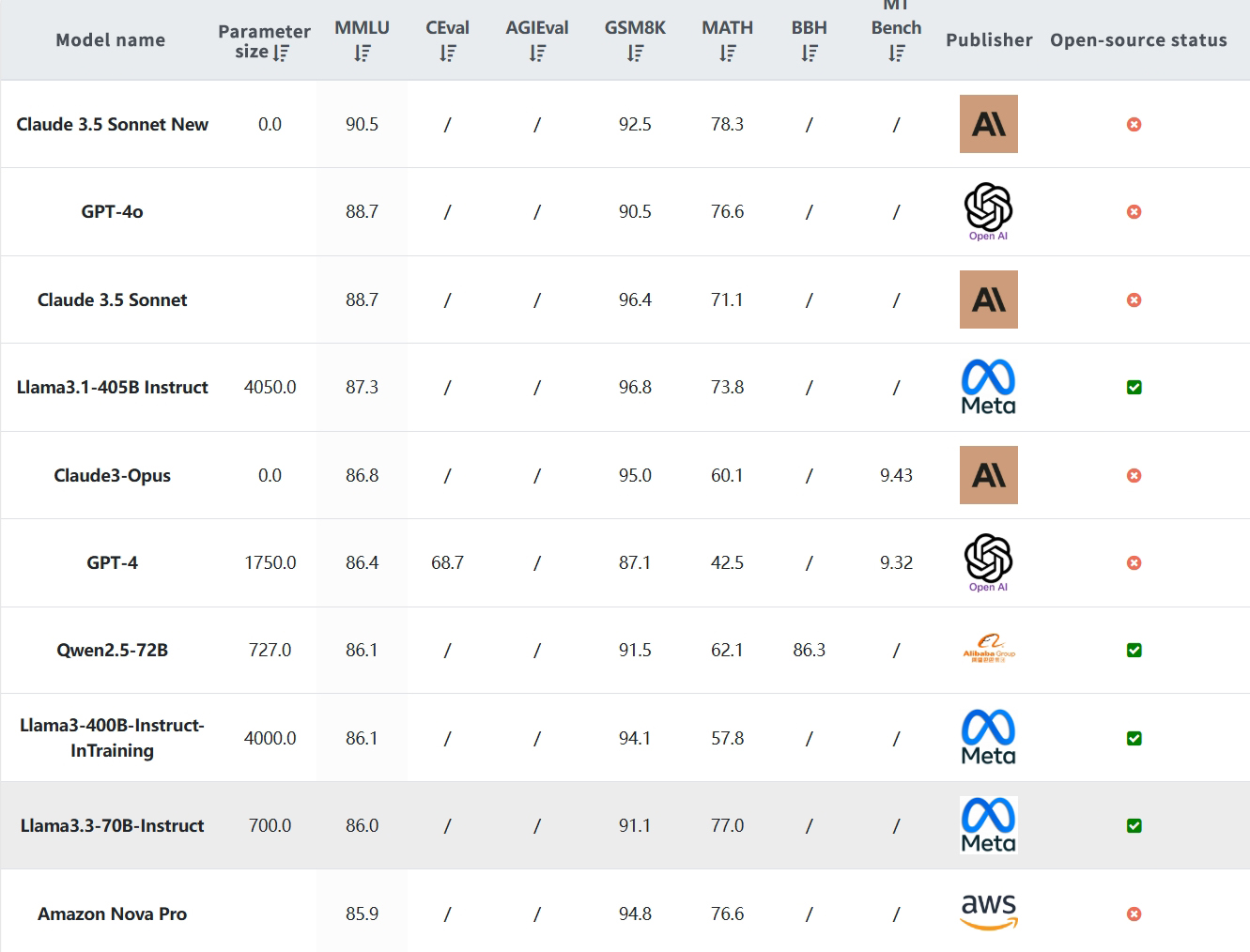

Llama3.3-70B-Instruct outperforms many open-source and closed-source chat models on a range of industry benchmarks.

Notably, although Llama3.3-70B-Instruct has only about 70 billion parameters, its scores on most metrics are roughly equal to those of the 405-billion-parameter Llama3.1-405B. In other words, it delivers quality similar to a model nearly six times its size while generating text faster and using far fewer resources!
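A back-of-the-envelope calculation makes the "fewer resources" point concrete. This sketch uses the nominal parameter counts from the model names, counts weight memory only (no KV cache or activations), and relies on the common rule of thumb of about 2 floating-point operations per parameter per generated token for a dense model. These are approximations, not measured figures.

```python
# Nominal parameter counts taken from the model names
PARAMS = {"Llama3.3-70B": 70e9, "Llama3.1-405B": 405e9}
# Storage cost per parameter for common weight formats
BYTES_PER_PARAM = {"bf16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(n_params: float, fmt: str) -> float:
    """Memory needed just to hold the weights, in decimal gigabytes."""
    return n_params * BYTES_PER_PARAM[fmt] / 1e9


def approx_flops_per_token(n_params: float) -> float:
    """Rule of thumb for a dense decoder: about 2 FLOPs per parameter per token."""
    return 2 * n_params


for name, n in PARAMS.items():
    sizes = ", ".join(f"{fmt} {weight_memory_gb(n, fmt):.1f} GB" for fmt in BYTES_PER_PARAM)
    print(f"{name}: {sizes}; ~{approx_flops_per_token(n) / 1e9:.0f} GFLOPs/token")

ratio = PARAMS["Llama3.1-405B"] / PARAMS["Llama3.3-70B"]
print(f"405B / 70B = {ratio:.2f}x")
```

At bf16 the 70B model needs roughly 140 GB for its weights versus roughly 810 GB for the 405B model, and about 5.8x less compute per generated token, which is where the "nearly six times" comparison comes from.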

The following figure compares Llama3.3-70B-Instruct with other models in the industry:

The model achieves the best result on several of these benchmarks and, in some cases, reaches or exceeds the level of GPT-4o.

On the global large-model leaderboard maintained by DataLearnerAI, ranked by MMLU, Llama3.3-70B-Instruct places ninth: ahead of Amazon Nova Pro and slightly behind Qwen2.5-72B. On mathematical reasoning, however, it scores 77, well ahead of comparable models and clearly higher than Qwen2.5-72B.

The relationship between Llama3.3 and other Llama3 series models

Below is a brief overview of the Llama3 release history, which shows where Llama3.3-70B-Instruct fits in the Llama family and what it is meant to do.

The Llama3 family currently spans four releases: Llama3 in April 2024, Llama3.1 in July 2024, Llama3.2 in September 2024, and Llama3.3 in early December 2024.

Llama3 and Llama3.1 follow a conventional major-release pattern, each shipping models at several scales: Llama3 came in 8B and 70B sizes, and Llama3.1 offered 8B, 70B, and 405B.

Llama3.2, by contrast, added only small text-only models (1B and 3B) and multimodal models (11B and 90B), so it is best viewed as a supplement to Llama3.1.

Meta likewise presents Llama3.3-70B-Instruct as an iteration on post-training techniques. This suggests its base model may still be Llama3.1-70B, with the improvements coming from different post-training and instruction-tuning methods.

Training cost and open-source situation of Llama3.3-70B-Instruct

According to Meta, training Llama3.3-70B-Instruct took 7 million GPU hours, mainly on H100-80GB GPUs. At AWS prices, that works out to roughly 4.3 million US dollars!
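The cost estimate is simply GPU hours multiplied by an hourly price. The sketch below backs out the per-GPU-hour rate implied by the 4.3-million-dollar figure and shows how the total moves under a few hypothetical rates. None of those rates are quoted AWS prices; they only illustrate how sensitive the estimate is to the price assumed.

```python
GPU_HOURS = 7.0e6          # Meta's reported training compute
REPORTED_COST_USD = 4.3e6  # the dollar estimate quoted above


def training_cost_usd(gpu_hours: float, usd_per_gpu_hour: float) -> float:
    """Total cost = GPU hours x price per GPU hour."""
    return gpu_hours * usd_per_gpu_hour


def implied_rate(total_usd: float, gpu_hours: float) -> float:
    """Per-GPU-hour price that a total cost estimate implies."""
    return total_usd / gpu_hours


rate = implied_rate(REPORTED_COST_USD, GPU_HOURS)
print(f"implied rate: ${rate:.2f} per GPU-hour")

# Hypothetical rates, purely for sensitivity analysis
for r in (1.0, 2.0, 4.0):
    print(f"${r:.2f}/GPU-h -> ${training_cost_usd(GPU_HOURS, r) / 1e6:.1f}M")
```

The quoted total corresponds to about $0.61 per GPU-hour, so anyone comparing against a specific cloud price list can plug that list's rate into `training_cost_usd` directly.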

Frankly, that is far beyond what an individual can afford. The good news is that Llama3.3-70B-Instruct is openly released under the Llama 3.3 Community License, which allows free commercial use subject to its terms.