Cost Cut to One Hundred-Thousandth! A Week of Atmospheric Simulation in Just 9.2 Seconds: Google's Climate Model Published in Nature

On July 23, Google published a paper in Nature introducing the NeuralGCM atmospheric model developed in collaboration with the European Centre for Medium-Range Weather Forecasts (ECMWF). This model combines traditional physics-based modeling with machine learning to improve the accuracy and efficiency of weather and climate predictions.

NeuralGCM's forecast accuracy at lead times of 1 to 15 days is comparable to that of ECMWF, which operates the world's most advanced traditional physics-based weather forecasting model. When sea surface temperature is supplied as an input, NeuralGCM's 40-year climate simulations match the global warming trend found in ECMWF data. NeuralGCM also outperforms existing climate models in predicting cyclones and their trajectories.

NeuralGCM is also far ahead on speed: it can generate 22.8 days of atmospheric simulation in 30 seconds of computation, at a computational cost 100,000 times lower than that of traditional General Circulation Models (GCMs). As the first climate model based on machine learning, NeuralGCM raises both the accuracy and the efficiency of weather forecasting and climate simulation to a new level.
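The headline figure of 9.2 seconds per simulated week follows directly from the rate of 22.8 days per 30 seconds. A quick check of that arithmetic, assuming throughput stays constant over the run (the variable names are illustrative only):

```python
# Throughput quoted in the article: 22.8 simulated days per 30 s of compute.
SIMULATED_DAYS = 22.8
COMPUTE_SECONDS = 30.0

seconds_per_simulated_day = COMPUTE_SECONDS / SIMULATED_DAYS

# Assumption: the rate is constant, so cost scales linearly with run length.
seconds_per_week = 7 * seconds_per_simulated_day
print(f"One simulated week: {seconds_per_week:.1f} s")
```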

I. Machine Learning Drives the Transformation of Climate Models

The Earth is warming at an unprecedented rate, and extreme weather events have become more frequent in recent years. The World Meteorological Organization has stated that 2023 was the hottest year on record and that 2024 could be hotter still. With extreme weather growing more frequent, climate forecasting matters more than ever.

General Circulation Models (GCMs) are the foundation of weather and climate prediction. They are traditional physics-based models used to simulate and predict the Earth's atmosphere and climate system. By simulating physical processes in the atmosphere, oceans, land, and ice caps, GCMs can provide long-term weather and climate forecasts. Despite decades of continuous improvement, traditional climate models still produce errors and biases, both because scientists' understanding of how the Earth's climate works is incomplete and because of the way the models themselves are built.

Stephan Hoyer, a senior engineer at Google, explained that traditional GCMs divide the Earth into cubes extending from the surface up into the atmosphere, typically 50 to 100 kilometers on a side, and predict how the weather changes within each cube over a given period. The predictions are derived from physical laws that describe the movement of air and moisture. However, many important climate processes, such as cloud formation and precipitation, occur on scales ranging from millimeters to kilometers. These scales are far smaller than the cubes used in current GCMs, so the processes cannot be calculated accurately from physics alone.
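To get a feel for the scales involved, the number of surface cells at each cube size can be estimated from the Earth's surface area. This is a rough sketch that assumes a perfect sphere with a mean radius of 6,371 km and equal-area square cells; real model grids are laid out differently, and the function name is illustrative.

```python
import math

EARTH_RADIUS_KM = 6371.0  # mean Earth radius (sphere approximation)
surface_area_km2 = 4 * math.pi * EARTH_RADIUS_KM ** 2

def approx_columns(cell_side_km: float) -> int:
    """Rough count of surface cells with the given side length."""
    return round(surface_area_km2 / cell_side_km ** 2)

for side_km in (50, 100):
    print(f"{side_km} km cells: about {approx_columns(side_km):,} columns")

# Gap between a 100 km cell and a 1 km cloud-scale process, in length.
cell_to_cloud_ratio = 100 / 1
print(f"A 100 km cell is {cell_to_cloud_ratio:.0f}x wider than a 1 km cloud")
```

Halving the cell side quadruples the number of columns, which is one reason traditional models cannot simply shrink their cubes down to cloud scale.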

Moreover, scientists lack a complete physical understanding of certain processes, such as how clouds form. Traditional models therefore do not rely entirely on physical principles. Instead, they use simplified models to approximate these small-scale weather dynamics, a practice known as parameterization. This approach reduces the accuracy of GCMs.

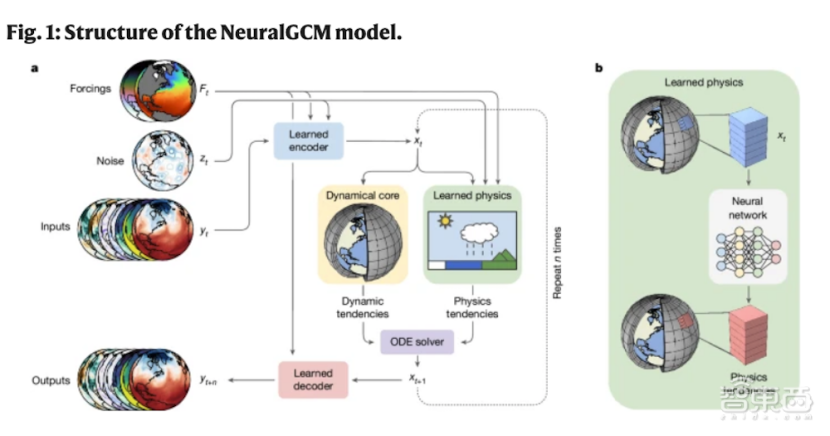

Like traditional models, NeuralGCM divides the Earth's atmosphere into cubes and calculates the physical characteristics of large-scale changes such as air and moisture movement. However, for small-scale weather dynamics like cloud formation, NeuralGCM does not use traditional parameterization; instead, it employs neural networks to learn the physical characteristics of these weather dynamics from existing weather data.

Hoyer said that a key innovation of NeuralGCM is that Google rewrote the numerical solver for the large-scale processes from scratch in JAX, which allows the model to be tuned "online" using gradient-based optimization. Another advantage of writing the entire model in JAX is that it runs efficiently on TPUs and GPUs, whereas most traditional climate models run on CPUs.
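The idea of tuning a learned component "online", through the solver itself, can be illustrated with a toy problem. The sketch below is not NeuralGCM's code: it replaces the atmosphere with a single decaying quantity, replaces the neural network with one learnable number `theta`, and carries the derivative by hand where JAX would use automatic differentiation. All names and constants are made up for illustration.

```python
# Toy "hybrid" model: known physics plus one learned parameter.
A_KNOWN = 1.0    # resolved decay rate (the physics the solver knows)
B_HIDDEN = 0.5   # unresolved extra decay that the model must learn
DT, N_STEPS, X0 = 0.1, 10, 1.0

def true_trajectory():
    """Reference data: Euler steps of dx/dt = -(A_KNOWN + B_HIDDEN) * x."""
    x, out = X0, []
    for _ in range(N_STEPS):
        x = x + DT * (-(A_KNOWN + B_HIDDEN) * x)
        out.append(x)
    return out

def loss_and_grad(theta, targets):
    """Roll the hybrid solver forward and differentiate through every step.

    x is the state and s = dx/dtheta is carried alongside it, standing in
    for the gradient an autodiff framework would compute.
    """
    x, s = X0, 0.0
    loss, grad = 0.0, 0.0
    for target in targets:
        tendency = -A_KNOWN * x + theta * x        # physics + learned term
        d_tendency = -A_KNOWN * s + x + theta * s  # derivative w.r.t. theta
        x, s = x + DT * tendency, s + DT * d_tendency
        err = x - target
        loss += err * err
        grad += 2.0 * err * s
    return loss, grad

targets = true_trajectory()
theta = 0.0
for _ in range(200):
    loss, grad = loss_and_grad(theta, targets)
    theta -= 0.3 * grad  # plain gradient descent

print(f"learned theta = {theta:.4f} (hidden value: {-B_HIDDEN})")
```

Because the loss is measured on the multi-step rollout rather than on a single step, the learned term is fitted in the context of the solver it will actually run inside, which is the point of making the whole model differentiable.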

II. Prediction Accuracy Superior to Current State-of-the-Art Models

The paper shows that NeuralGCM's deterministic model (which outputs a single, definite prediction) at a resolution of 0.7° performs comparably to current state-of-the-art models for weather forecasts up to 5 days ahead.

Since a deterministic model provides only one prediction, it cannot fully represent the range of possible future states of the climate system. Ensemble forecasting addresses this by generating a series of possible weather scenarios from slightly different initial conditions. By combining these scenarios, ensemble forecasts produce probabilistic weather predictions, which are typically more accurate than deterministic forecasts. The paper states that NeuralGCM's 1.4° resolution ensemble model outperforms current state-of-the-art models in forecast accuracy at 5 to 15 days.
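The mechanics of an ensemble forecast can be sketched in a few lines. The example below uses the chaotic logistic map as a stand-in for a weather model, purely as an assumption for illustration; the initial state, perturbation size, and threshold are hypothetical and have nothing to do with NeuralGCM's actual configuration.

```python
import random
import statistics

def logistic_forecast(x0, steps=30, r=3.9):
    """Stand-in "forecast model": iterate the chaotic logistic map."""
    x = x0
    for _ in range(steps):
        x = r * x * (1.0 - x)
    return x

rng = random.Random(0)   # fixed seed so the run is repeatable
ANALYSIS_X0 = 0.4        # hypothetical best-guess initial state
N_MEMBERS = 50

# Deterministic forecast: one run from the best-guess initial state.
deterministic = logistic_forecast(ANALYSIS_X0)

# Ensemble forecast: many runs from slightly perturbed initial states.
members = [
    logistic_forecast(ANALYSIS_X0 + rng.gauss(0.0, 1e-3))
    for _ in range(N_MEMBERS)
]

ensemble_mean = statistics.mean(members)
ensemble_spread = statistics.stdev(members)
# Probabilistic statement: fraction of members above a threshold.
prob_above_half = sum(m > 0.5 for m in members) / N_MEMBERS

print(f"deterministic: {deterministic:.3f}")
print(f"ensemble mean: {ensemble_mean:.3f}, spread: {ensemble_spread:.3f}")
print(f"P(x > 0.5) = {prob_above_half:.2f}")
```

The single run yields one number, while the ensemble yields a spread and a probability, which is the kind of output a probabilistic forecast is built from.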

NeuralGCM is also more accurate than current state-of-the-art models in long-term climate prediction. When predicting temperatures over the 40-year period from 1980 to 2020, NeuralGCM's 2.8° deterministic model has an average error of only 0.25 degrees Celsius, one-third of the error of the models in the Atmospheric Model Intercomparison Project (AMIP).

III. Completing a Year of Atmospheric Dynamics Simulation in 8 Minutes

Hoyer stated that NeuralGCM is several orders of magnitude faster than traditional GCMs, with significantly lower computational costs. NeuralGCM's 1.4° model is over 3,500 times faster than the high-precision climate model X-SHiELD. In other words, researchers would need 20 days to simulate a year of atmospheric dynamics with X-SHiELD, whereas NeuralGCM takes only 8 minutes.
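The two wall-clock figures are consistent with the quoted speedup, as a quick calculation shows. This sketch takes the rounded values of 20 days and 8 minutes at face value, so the result is only approximate.

```python
MINUTES_PER_DAY = 24 * 60

xshield_minutes = 20 * MINUTES_PER_DAY  # 20 days of wall-clock time
neuralgcm_minutes = 8                   # 8 minutes of wall-clock time

speedup = xshield_minutes / neuralgcm_minutes
print(f"Speedup: about {speedup:,.0f}x")
```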

Furthermore, researchers need to request access to a supercomputer with 13,000 CPUs to run X-SHiELD, whereas NeuralGCM needs only a computer with a single TPU. Hoyer said that the computational cost of climate simulation with NeuralGCM is one hundred-thousandth that of X-SHiELD.

Towards a More Open, Fast, and Efficient Climate Prediction Model

The Google Research team has made the source code and model weights of NeuralGCM publicly available on GitHub for non-commercial use. Hoyer said Google hopes researchers worldwide will take an active part in testing and improving the model. Because NeuralGCM can run on a laptop, the team also hopes more climate researchers will use it in their work.

Currently, NeuralGCM only simulates the Earth's atmosphere, but Google hopes to incorporate other climate systems, such as oceans and carbon cycles, into the model in the future. Although NeuralGCM is not yet a complete climate model, its emergence provides new ideas for climate prediction, and we expect to see AI further improve the accuracy and speed of climate forecasts in the future.